Tools: Unreal Engine | Blender | Houdini | Touch Designer | Python | Character Creator | Git | ElevenLabs | Stable Diffusion | ChatGPT

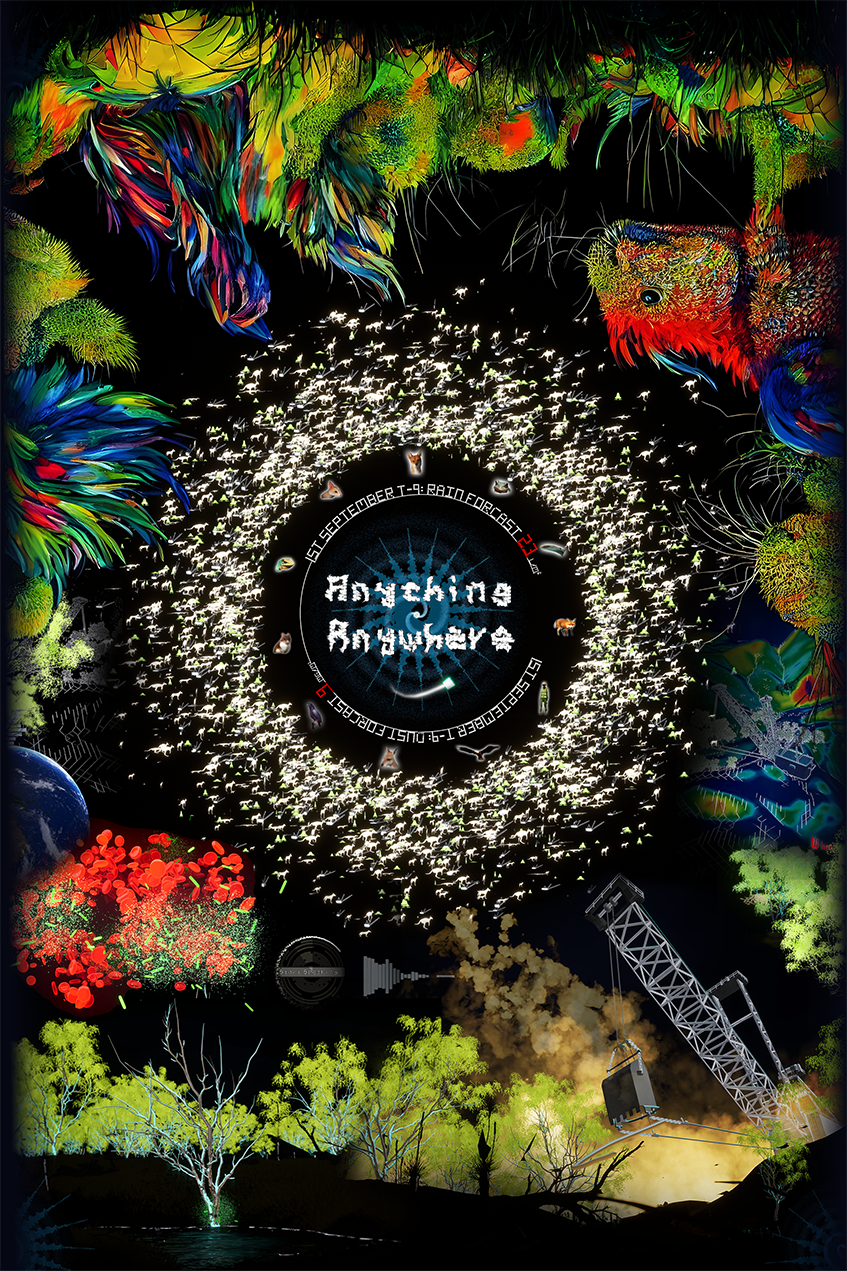

Anything, Anywhere

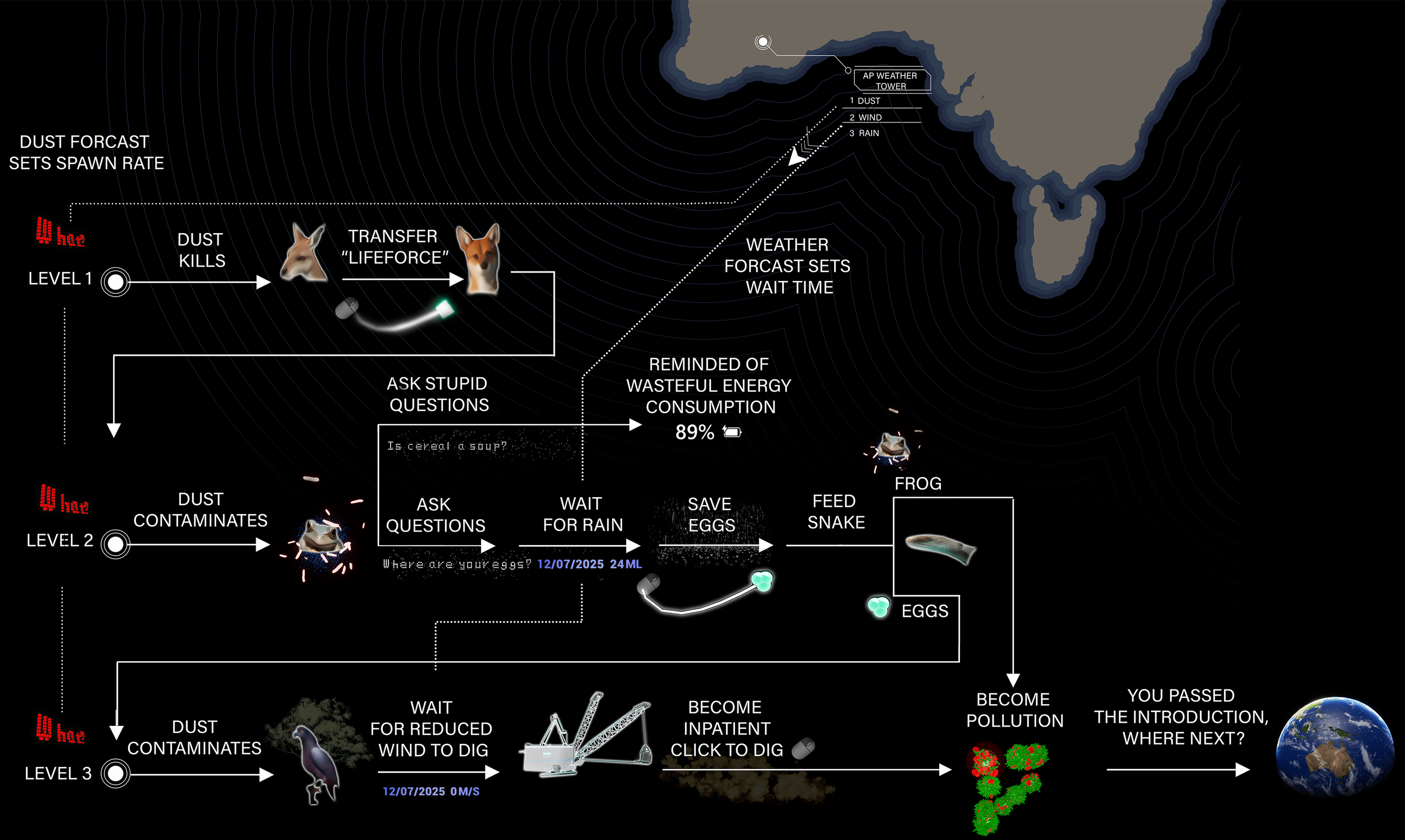

Playthrough

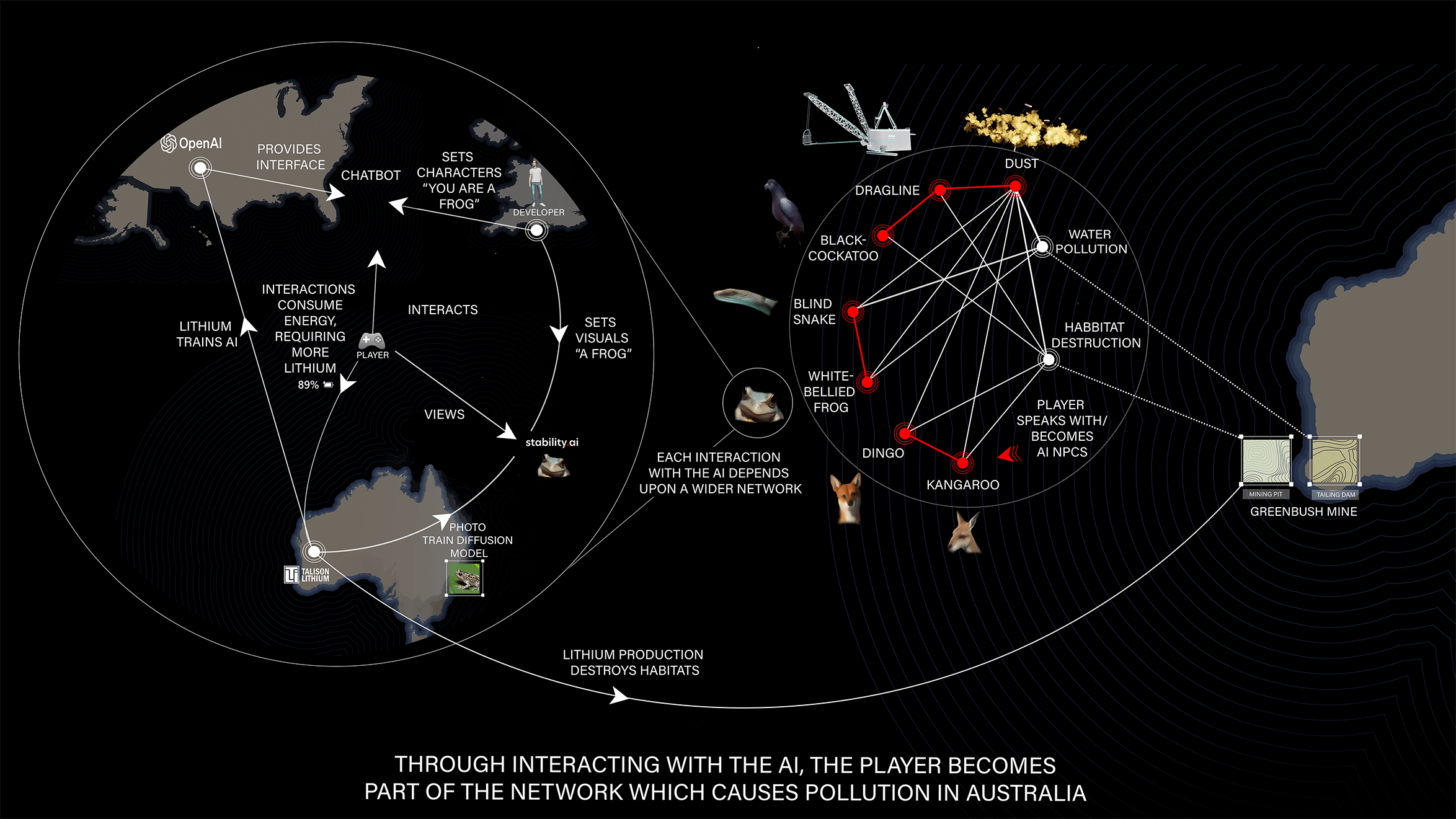

An AI-driven interactive narrative system validated through user research. It combines AI characters with real-world weather data to explore the environmental devastation caused by mining lithium, a crucial component of the rechargeable batteries that power modern technology.

Level 2

Dust is contaminating a frog and its eggs.

Level 3

Dust is contaminating a black cockatoo's Marri tree.

Greenbushes Mine

The Greenbushes Mine Expansion

Turning an abstract idea into a compelling visual narrative. Located in south-western Australia, the Greenbushes mine is the largest lithium mine on Earth. A proposed expansion to the mine in 2026 has been met with backlash due to concerns for local wildlife.

Weather Forecast

If the live forecast shows rain, it's a good time to play Level 2.

The Heightmap

I used the ELVIS lidar database to generate the mine heightmap, which I optimised in Houdini.
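Unreal landscapes import heightmaps as 16-bit grayscale images, so whatever tools sit in between (here, ELVIS and Houdini), the core step is a linear remap of real elevations into the 0–65535 range. A minimal sketch, assuming the LiDAR elevations are already loaded as rows of values in metres; `to_heightmap16` is a hypothetical helper, not code from the project:

```python
from typing import List, Tuple

def to_heightmap16(dem: List[List[float]]) -> Tuple[List[List[int]], float, float]:
    """Linearly remap elevations (metres) onto 0..65535.

    Returns the 16-bit grid plus the min/max elevation, which are needed
    afterwards to choose a landscape Z scale that keeps real proportions.
    """
    flat = [z for row in dem for z in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A perfectly flat tile: place every sample at the mid-range value.
        return [[32768 for _ in row] for row in dem], lo, hi
    grid = [[round((z - lo) / span * 65535) for z in row] for row in dem]
    return grid, lo, hi
```

Keeping the min/max alongside the grid matters because the 16-bit values alone no longer say how tall the terrain really is.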

Lithium = Dust = Roadkill / Contamination

The dust created by mining reduces visibility on roads, putting land animals at risk of becoming roadkill. Dust also spreads into surrounding water sources, increasing disease.

Gameplay Loops

Tied To The Real World

The player becomes and communicates with artificially intelligent animals, machinery and dust, experiencing firsthand how mining can alter ecosystems. The experience is enhanced through real-world weather and dust forecasts, which impact the gameplay and bring the player face-to-face with a live, ongoing ecological crisis.

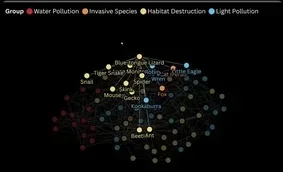

The Full Network

The player's inevitable culpability in this crisis is highlighted throughout the experience as they are made aware of their energy usage.

Dust Forecast Sent From Touch Designer To Unreal

Dust Concentration (µg/m³, Hourly) Sets The Spawn Rate Of ‘Minerals’ And The Displayed Date/Time
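The page doesn't say how the forecast is fetched or how Touch Designer passes it to Unreal, so the payload layout, thresholds and function names below are all hypothetical. The sketch only shows the two steps the caption describes: picking the current hour's dust value (and its timestamp for the date/time display) and mapping it to a clamped spawn rate.

```python
import json
from datetime import datetime

# Hypothetical payload shape: hourly timestamps with matching dust values in µg/m³.
SAMPLE = json.dumps({
    "hourly": {
        "time": ["2025-03-01T10:00", "2025-03-01T11:00", "2025-03-01T12:00"],
        "dust": [4.0, 35.0, 120.0],
    }
})

def dust_for_hour(payload: str, now: datetime) -> tuple[str, float]:
    """Return (timestamp, dust) for the latest forecast hour at or before `now`.

    Assumes timestamps are in ascending order.
    """
    hourly = json.loads(payload)["hourly"]
    best = (hourly["time"][0], hourly["dust"][0])  # fallback if `now` precedes the forecast
    for stamp, dust in zip(hourly["time"], hourly["dust"]):
        if datetime.fromisoformat(stamp) <= now:
            best = (stamp, dust)
    return best

def spawn_rate(dust: float, max_dust: float = 100.0, max_rate: float = 20.0) -> float:
    """Map dust linearly to 'mineral' spawns per second, clamped to 0..max_rate."""
    t = max(0.0, min(dust / max_dust, 1.0))
    return t * max_rate
```

Clamping keeps a dust spike from flooding the level with actors, while the returned timestamp can drive the on-screen date/time.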

Playtests/Interviews

I wanted to see how players would engage with the experience, testing how diverse demographics responded to the voice recognition and AI interactions.

Playtest 1: Speaking Early

Players would speak before the voice recognition had a chance to process their words.

I added a widget that prompts the player to ‘Start Speaking’. The player’s microphone input is then visualised with an audio spectrum.
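An audio spectrum is a set of frequency-band magnitudes computed from short windows of microphone samples. Engines normally do this with an FFT; the deliberately naive DFT below, using a hypothetical `spectrum_bands` helper, only illustrates what the bars represent, not how the project computes them:

```python
import cmath
import math

def spectrum_bands(samples: list[float], bands: int = 8) -> list[float]:
    """Naive O(n^2) DFT: magnitudes of bins 1..n/2, averaged into `bands` bars."""
    n = len(samples)
    mags = []
    for k in range(1, n // 2 + 1):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc) / n)
    size = max(1, len(mags) // bands)
    # Each bar is the mean magnitude of `size` neighbouring frequency bins.
    return [sum(mags[i:i + size]) / size for i in range(0, size * bands, size)]
```

A pure tone lights up a single bar, while speech spreads energy across several, which is what makes the widget readable as "the game is hearing me".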

Niagara

To distinguish the human player’s voice from the AI NPCs, I visualised the AI replies with a Niagara system. I aimed for an organic shape to represent the non-human artificial/ecological intelligence.

Playtest 2: Missing The Visuals

Players often missed the AI-generated imagery because they didn't know where it began.

I added a sphere to denote the start of the generative imagery.

Materials

To stop this glowing orb from looking too ‘sci-fi’, I gave it the texture of an oil spill, hinting at my ecological theme.

Playtest 3: Forgetting To Speak

The novelty of speaking with intelligent animals meant that some players still wouldn’t ask a question even after being prompted to speak.

I used the AI to remind the player to ask a question if the voice recognition picked up only static (e.g. “Static”).

Blueprints

The dispatched event sets an Enum, which in turn sets the AI’s character profile to:

“The user is trying to communicate with you, remind them to hold ‘X’”
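The reminder logic boils down to: if the transcript is empty or just “Static”, switch the Enum to a reminder profile. A minimal Python restatement of that Blueprint flow, where `AIMode`, `is_static` and the profile table are hypothetical names:

```python
from enum import Enum, auto

class AIMode(Enum):
    """Hypothetical stand-in for the Blueprint Enum that selects a character profile."""
    FROG = auto()
    REMIND_TO_SPEAK = auto()

PROFILES = {
    AIMode.FROG: "You are a frog. You need help getting your eggs to safety to avoid pollution.",
    AIMode.REMIND_TO_SPEAK: "The user is trying to communicate with you, remind them to hold ‘X’",
}

def is_static(transcript: str) -> bool:
    """Treat an empty transcript, or one that is just 'Static', as no real speech."""
    return transcript.strip().strip(".!?").lower() in ("", "static")

def choose_mode(transcript: str, default: AIMode = AIMode.FROG) -> AIMode:
    return AIMode.REMIND_TO_SPEAK if is_static(transcript) else default

def profile_for(transcript: str) -> str:
    return PROFILES[choose_mode(transcript)]
```

Routing the reminder through the character profile, rather than a UI popup, keeps the nudge in the animal's own voice.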

Playtest 4: Breaking The AI

Players would often try to make the AI NPCs break character by asking ‘stupid’ questions like “How old are you?”

I decided to indulge them: the AI would break character, but it would also remind them of their energy usage.

The key technique was using the LLM output as part of a state machine: the model emits hidden control markers that trigger events (such as “transform into the frog to progress”), so the narrative stays coherent while still feeling open-ended.

This was implemented by having the AI hide tokens in its text: [[STUPID]] when it detects a stupid question, and [[HELP_ACCEPTED]] when it detects an offer to help.

An Example Of An AI Character Profile From Levels 2–3

You are a frog. You need help getting your eggs to safety to avoid pollution.

Stay in character. Keep the dialogue under two sentences.

CRITICAL GAME RULE 1:

If (and only if) the user explicitly offers to help you,

you MUST include the exact token [[HELP_ACCEPTED]] somewhere in your reply.

If the user does not explicitly offer help, DO NOT include this token.

Never mention or explain the token.

CRITICAL GAME RULE 2:

If (and only if) the user asks a question that is clearly unrelated to the ecological theme

(pollution, water safety, habitat, eggs/tadpoles, nature, conservation, the frog’s situation),

you MUST include the exact token [[STUPID]] somewhere in your reply.

If the user’s question is related to ecology or helping the frog, DO NOT include [[STUPID]].

Examples of UNRELATED questions (should include [[STUPID]]):

- "Is cereal a soup?"

- "Who would win in a fight, a shark or a lion?"

Parsing The AI Response

Tokens generated when the player offers to help or asks a stupid question are checked for as the AI response is parsed.

Triggering Events (Dependent On Enum For Game Mode)

The tokens sent from the OpenAI Actor Component set booleans that trigger events. Offering to help transforms the player into an animal, while asking a stupid question results in the AI responding with a meta comment on the player’s energy usage.
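Because the tokens must drive game logic but never reach text-to-speech, the parser has to both detect and strip them. A minimal sketch of that step in Python, assuming tokens always take the `[[NAME]]` form; `parse_reply` and `ParsedReply` are hypothetical stand-ins, not the OpenAI Actor Component's actual code:

```python
import re
from dataclasses import dataclass

# Matches hidden control tokens such as [[HELP_ACCEPTED]] or [[STUPID]].
TOKEN_RE = re.compile(r"\[\[([A-Z_]+)\]\]")

@dataclass
class ParsedReply:
    speech: str          # reply text with tokens removed, safe to send to TTS
    help_accepted: bool  # True -> transform the player into the animal
    stupid: bool         # True -> respond with the energy-usage meta comment

def parse_reply(raw: str) -> ParsedReply:
    tokens = set(TOKEN_RE.findall(raw))
    speech = TOKEN_RE.sub("", raw)
    speech = re.sub(r"\s{2,}", " ", speech).strip()  # tidy the gap a removed token leaves
    return ParsedReply(speech, "HELP_ACCEPTED" in tokens, "STUPID" in tokens)
```

Since the prompt allows the token "somewhere in your reply", the parser searches the whole string instead of assuming a fixed position.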

Input (voice) → ASR → LLM (character + constraints) → TTS → Image generation → Game state triggers → UI feedback
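The chain above is a sequence of stages that each enrich one exchange. The sketch below models it as a list of functions over a shared record; every stage is a placeholder (no real ASR, LLM, TTS or Stable Diffusion calls), and names such as `Turn` and `TransformIntoFrog` are hypothetical:

```python
from dataclasses import dataclass, field
from typing import Callable, List

TOKEN = "[[HELP_ACCEPTED]]"

@dataclass
class Turn:
    """Everything one voice exchange produces as it moves through the pipeline."""
    audio: bytes
    transcript: str = ""
    reply: str = ""
    speech: bytes = b""
    image_prompt: str = ""
    events: List[str] = field(default_factory=list)
    ui: str = ""

Stage = Callable[[Turn], Turn]

def run(turn: Turn, stages: List[Stage]) -> Turn:
    """Apply each stage in order; each reads earlier fields and fills in its own."""
    for stage in stages:
        turn = stage(turn)
    return turn

# Placeholder stages: the real ones would call ASR, an LLM, TTS and Stable Diffusion.
def asr(t: Turn) -> Turn:
    t.transcript = t.audio.decode("utf-8")
    return t

def llm(t: Turn) -> Turn:
    t.reply = f"Thank you! {TOKEN}" if "help" in t.transcript.lower() else "Ribbit."
    return t

def tts(t: Turn) -> Turn:
    t.speech = t.reply.replace(TOKEN, "").strip().encode("utf-8")  # never voice the token
    return t

def image_gen(t: Turn) -> Turn:
    t.image_prompt = t.transcript
    return t

def game_triggers(t: Turn) -> Turn:
    if TOKEN in t.reply:
        t.events.append("TransformIntoFrog")
    return t

def ui_feedback(t: Turn) -> Turn:
    t.ui = f"{len(t.events)} event(s) fired"
    return t

PIPELINE = [asr, llm, tts, image_gen, game_triggers, ui_feedback]
```

Keeping each stage behind the same signature means a real service can replace a placeholder without touching the others.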

Event Graph BP_Controller

Blueprint Interfaces let line-trace targets react without hard casts, so world actors can change without rewiring. Keeping all parsing in one function makes game triggers reliable and prevents control tokens, continent names and item lists from being spoken. This scales well because new modes can be added by extending the Enum and adding a parser/handler, rather than rewriting the whole graph.
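The “extend the Enum, add a parser” pattern is essentially a dispatch table keyed by game mode. A Python sketch of the idea, with hypothetical mode and function names; in the project this lives in Blueprints:

```python
from enum import Enum
from typing import Callable, Dict

class GameMode(Enum):
    """Hypothetical mirror of the Blueprint game-mode Enum."""
    FROG = "frog"
    ANYWHERE = "anywhere"
    ANYTHING = "anything"

Parser = Callable[[str], dict]
PARSERS: Dict[GameMode, Parser] = {}

def parser_for(mode: GameMode) -> Callable[[Parser], Parser]:
    """Decorator that registers a reply parser for one game mode."""
    def register(fn: Parser) -> Parser:
        PARSERS[mode] = fn
        return fn
    return register

@parser_for(GameMode.FROG)
def parse_frog(reply: str) -> dict:
    return {"help_accepted": "[[HELP_ACCEPTED]]" in reply, "stupid": "[[STUPID]]" in reply}

@parser_for(GameMode.ANYTHING)
def parse_anything(reply: str) -> dict:
    return {"sustainable": "[[YES]]" in reply}

def route(mode: GameMode, reply: str) -> dict:
    """Single entry point: look up the mode's parser and run it."""
    if mode not in PARSERS:
        raise ValueError(f"no parser registered for {mode.name}")
    return PARSERS[mode](reply)
```

`GameMode.ANYWHERE` is left unregistered on purpose: supporting it means adding one decorated function, while `route` stays unchanged.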

Improvements: Move routing into a dedicated “AI Router” component and switch from tokens to strict JSON outputs per mode.
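Switching to strict JSON would let the game reject malformed replies outright instead of searching free text for tokens. A minimal validation sketch, assuming a hypothetical per-mode schema of field names and types; it doesn't rely on any particular LLM API's JSON features:

```python
import json

# Hypothetical per-mode schemas: field name -> required Python type.
SCHEMAS = {
    "frog": {"reply": str, "help_accepted": bool, "stupid": bool},
    "anything": {"reply": str, "sustainable": bool},
}

def parse_strict(mode: str, raw: str) -> dict:
    """Parse and validate one reply; raises ValueError on anything off-schema."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    schema = SCHEMAS[mode]
    missing = schema.keys() - data.keys()
    extra = data.keys() - schema.keys()
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    for key, typ in schema.items():
        if not isinstance(data[key], typ):
            raise ValueError(f"{key} should be {typ.__name__}")
    return data
```

Rejecting extra fields as well as missing ones catches a model that drifts from the format before any game state changes.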

Parent Actor Class For All AI Visual Generation

3D text is converted to a string and sent to Touch Designer, where a Stable Diffusion model generates imagery from those words. A timer increases the image's opacity and denoising, so the generation grows stronger depending on the player’s distance from the centre.
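One way to combine the timer with the player's distance is to multiply a proximity factor by a time ramp. The sketch assumes strength increases as the player nears the centre and maps it to a hypothetical 0.2–0.8 denoise range; the real curve and ranges in the Touch Designer network may differ:

```python
def generation_strength(distance: float, radius: float, elapsed: float,
                        ramp_time: float = 5.0) -> tuple[float, float]:
    """Return (opacity, denoise) for the generated image.

    Assumptions: proximity is 1 at the centre and 0 at `radius` or beyond,
    and a timer eases the effect in over `ramp_time` seconds.
    """
    proximity = 1.0 - min(max(distance / radius, 0.0), 1.0)
    timer = min(max(elapsed / ramp_time, 0.0), 1.0)
    strength = proximity * timer
    opacity = strength
    denoise = 0.2 + 0.6 * strength  # hypothetical denoise range 0.2..0.8
    return opacity, denoise
```

Multiplying the two factors means walking away from the centre weakens the image immediately, even after the timer has finished.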

Setting t.MaxFPS 30 keeps the frame rate of the AI-generated visuals stable, and a sound mix modifier lowers the SFX volume so the player can hear AI responses clearly.

‘Anywhere’

AI Character Profile In Level 4

You are a location guide in a game. The player will say the name of any real-world place (city, country, region, landmark, island, etc.).

Return EXACTLY three lines.

LINE1 and LINE2 MUST start with the prefix shown: *

LINE1: CONTINENT where CONTINENT is exactly one of:

"EUROPE" "AFRICA" "ASIA" "NORTH_AMERICA" "SOUTH_AMERICA" "OCEANIA" "ANTARCTICA"

LINE2: Explain in 2–3 sentences how rare earth minerals are used/mined in that place, and what animals from there are endangered.

LINE3: Exactly 10 items separated by commas, with NO other punctuation (no full stops, no semicolons, no hyphens).

Items should be landmarks and endangered species associated with the place.

Format must be: item1, item2, item3, item4, item5, item6, item7, item8, item9, item10

Interface Event (Anywhere Game Mode)

The array of parsed animals and landmarks is written into an exposed array of Actors which can generate the visual representations.

Parsing the AI reply

Using * as a delimiter lets me separate the continent, explanation and list. A comma delimiter then splits the list into individual items.
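A sketch of that parsing in Python, assuming the reply follows the three-line format in the profile above, with the item list on the last line of the second `*` chunk. `parse_anywhere` is a hypothetical name, and the continent check mirrors the list in the prompt:

```python
VALID_CONTINENTS = {"EUROPE", "AFRICA", "ASIA", "NORTH_AMERICA",
                    "SOUTH_AMERICA", "OCEANIA", "ANTARCTICA"}

def parse_anywhere(raw: str) -> tuple[str, str, list[str]]:
    """Split a reply into (continent, explanation, items)."""
    parts = [p.strip() for p in raw.split("*") if p.strip()]
    if len(parts) != 2:
        raise ValueError(f"expected 2 '*'-prefixed lines, got {len(parts)}")
    continent = parts[0]
    if continent not in VALID_CONTINENTS:
        raise ValueError(f"unknown continent {continent!r}")
    # The explanation and the item list share the second chunk; the list is its last line.
    explanation, _, list_line = parts[1].rpartition("\n")
    if not explanation.strip():
        raise ValueError("missing explanation line")
    items = [item.strip() for item in list_line.split(",") if item.strip()]
    return continent, explanation.strip(), items
```

Validating the continent against the prompt's fixed list means a hallucinated region fails loudly instead of leaving the globe unrotated.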

Interface Event (Globe Rotation)

Arrows representing the rotation of each continent are rotated to a centre alignment.

Improvement:

Using coordinates would allow for more precise rotation, converting latitude/longitude to a unit direction vector.

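$$
\begin{aligned}
X &= \cos(\text{latitude})\,\cos(\text{longitude}) \\
Y &= \cos(\text{latitude})\,\sin(\text{longitude}) \\
Z &= \sin(\text{latitude}) \\
\text{Dir} &= \operatorname{normalize}(X, Y, Z)
\end{aligned}
$$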
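Since cos²(lat)·cos²(lon) + cos²(lat)·sin²(lon) + sin²(lat) = 1, the vector is already unit length, and the normalisation only guards against floating-point drift. The sketch below computes it and then finds the axis and angle that would rotate that point to face a chosen direction. Which direction faces the camera (`target`) and the mapping onto Unreal's coordinate system are assumptions:

```python
import math

Vec3 = tuple[float, float, float]

def lat_lon_to_dir(lat_deg: float, lon_deg: float) -> Vec3:
    """Unit direction for a latitude/longitude given in degrees (Z up)."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon),
            math.cos(lat) * math.sin(lon),
            math.sin(lat))

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v: Vec3) -> Vec3:
    n = math.sqrt(sum(c * c for c in v))
    return (v[0] / n, v[1] / n, v[2] / n)

def align_axis_angle(d: Vec3, target: Vec3 = (1.0, 0.0, 0.0)) -> tuple[Vec3, float]:
    """Axis and angle (radians) of the rotation carrying unit vector d onto unit vector target."""
    dot = max(-1.0, min(1.0, sum(a * b for a, b in zip(d, target))))
    angle = math.acos(dot)
    axis = cross(d, target)
    if math.sqrt(sum(c * c for c in axis)) < 1e-9:
        # d is parallel or opposite to target: any axis perpendicular to target works.
        helper = (0.0, 0.0, 1.0) if abs(target[2]) < 0.9 else (1.0, 0.0, 0.0)
        axis = cross(target, helper)
    return normalize(axis), angle
```

An axis-angle pair converts directly to a quaternion, which avoids the gimbal issues of rotating the globe through separate yaw and pitch steps.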

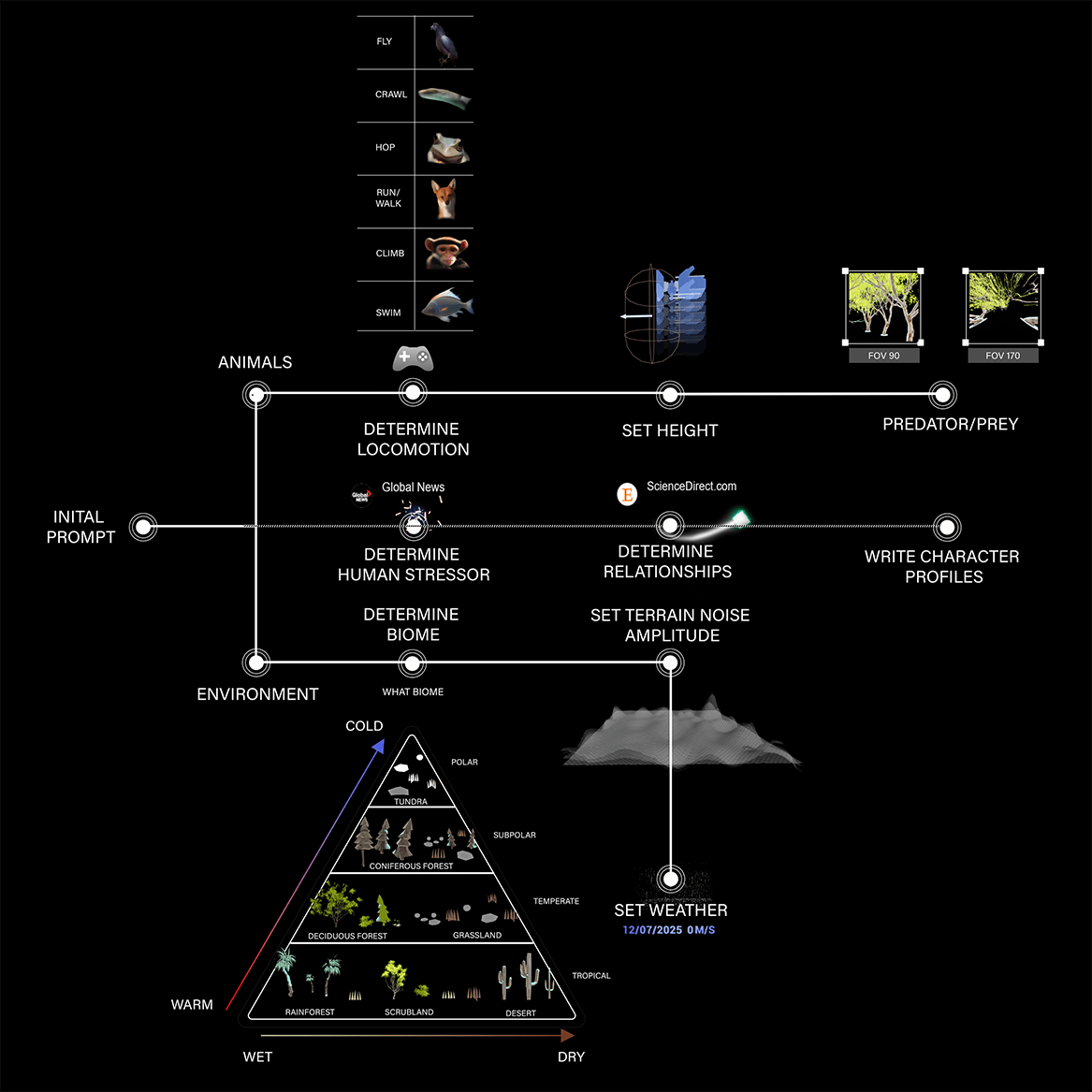

Anything

While I have focused on habitat destruction and water pollution, other human stressors, such as light pollution and invasive species, are equally valid. These, along with the web of creatures they impact, could set the difficulty level, with a more complex network creating a more complex narrative and gameplay.

Anywhere

For an LLM-driven system that can already interpret and elaborate, PCG provides the raw structure the model can narrativize: generating worlds from a single prompt, distributing assets by biome, setting animal locomotion and creating narratives by searching the web.

‘Anything’

AI Character Profile In Level 4

You are an ecology guide in a game. The player will name a thing, activity, material, or technology. Your job is to decide if it is generally sustainable in real-world use.

CRITICAL GAME RULE:

First line must include exactly one token: [[YES]] or [[NO]]. Then, in the same reply, give a brief explanation in 1–2 sentences. Do not include any other tokens.

If the input is ambiguous, choose the most likely outcome and explain briefly.

DECISION GUIDANCE:

Say [[YES]] when it’s broadly sustainable (renewable, low-impact, reusable, low emissions, etc.). Say [[NO]] when it’s broadly unsustainable (high emissions, single-use waste, toxic pollution, deforestation, etc.). Some things depend on context; make a reasonable assumption and mention the key condition.

Interface Event (Anything Game Mode)

bIsSustainable is set by the parsing in the player controller.

Parsing The AI Response

Token [[YES]] is generated when a sustainable thing is named.
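A minimal Python version of that check, assuming the verdict token appears on the first line as the profile requires; `parse_sustainable` is a hypothetical name standing in for the player controller's Blueprint parsing:

```python
import re

VERDICT = re.compile(r"\[\[(YES|NO)\]\]")

def parse_sustainable(reply: str) -> tuple[bool, str]:
    """Return (bIsSustainable, explanation) from an 'Anything' mode reply."""
    lines = reply.strip().splitlines()
    first = lines[0] if lines else ""
    verdicts = VERDICT.findall(first)
    if len(verdicts) != 1:
        raise ValueError(f"expected exactly one [[YES]]/[[NO]] on the first line, got {verdicts}")
    # Strip the token and collapse whitespace so the explanation can be spoken cleanly.
    explanation = " ".join(VERDICT.sub("", reply).split())
    return verdicts[0] == "YES", explanation
```

Treating zero or two verdicts as an error, rather than defaulting to [[NO]], stops an ambiguous reply from silently flipping the game state.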

Post Process Materials

Depicting how animals see will always require some anthropomorphizing, but we do know that birds see many more colours than we do, while frogs’ vision is blurred.

Procedural Content Generation

During the project’s development, the levels’ layouts and visual language changed more times than I care to remember, so PCG became a valuable time-saving device.

IK Animations

Created a rigged IK dragline excavator in Blender and integrated it into Unreal Engine for real-time interaction and destruction. The rig enabled believable bucket motion, while destruction simulated debris.

Character Animations

Blueprint-driven locomotion system translating character movement vectors into stable forward/side blendspace inputs, including smoothing and direction-aware animation control.
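The core of such a system is projecting velocity onto the character's forward and right vectors, normalising by max speed and easing the result. A Python sketch of that math, where `blendspace_inputs`, the deadzone and the exponential smoothing factor are assumptions rather than the project's Blueprint values:

```python
import math

def blendspace_inputs(velocity, forward, max_speed, prev=(0.0, 0.0),
                      alpha=0.2, deadzone=0.05):
    """Turn a ground-plane velocity into smoothed (forward, side) inputs in -1..1.

    velocity, forward: (x, y) world-space vectors; forward need not be unit length.
    prev: last frame's output, so the result eases toward the new value.
    """
    fx, fy = forward
    length = math.hypot(fx, fy)
    if length == 0.0:
        raise ValueError("forward vector must be non-zero")
    fx, fy = fx / length, fy / length
    rx, ry = -fy, fx  # right vector for an X-forward, Y-right frame (Unreal's convention)

    def axis(ax: float, ay: float) -> float:
        raw = (velocity[0] * ax + velocity[1] * ay) / max_speed
        raw = max(-1.0, min(1.0, raw))
        return 0.0 if abs(raw) < deadzone else raw  # ignore tiny drift to avoid jitter

    target = (axis(fx, fy), axis(rx, ry))
    # Exponential smoothing: move a fraction `alpha` of the way toward the target.
    return tuple(p + alpha * (t - p) for p, t in zip(prev, target))
```

Feeding the previous output back in each frame is what keeps the blendspace from snapping when the player changes direction abruptly.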